Credits for t8=t4=t2=5.000 seriously?

Joined: 7 Feb 20 Posts: 10 Credit: 6,625,400 RAC: 0

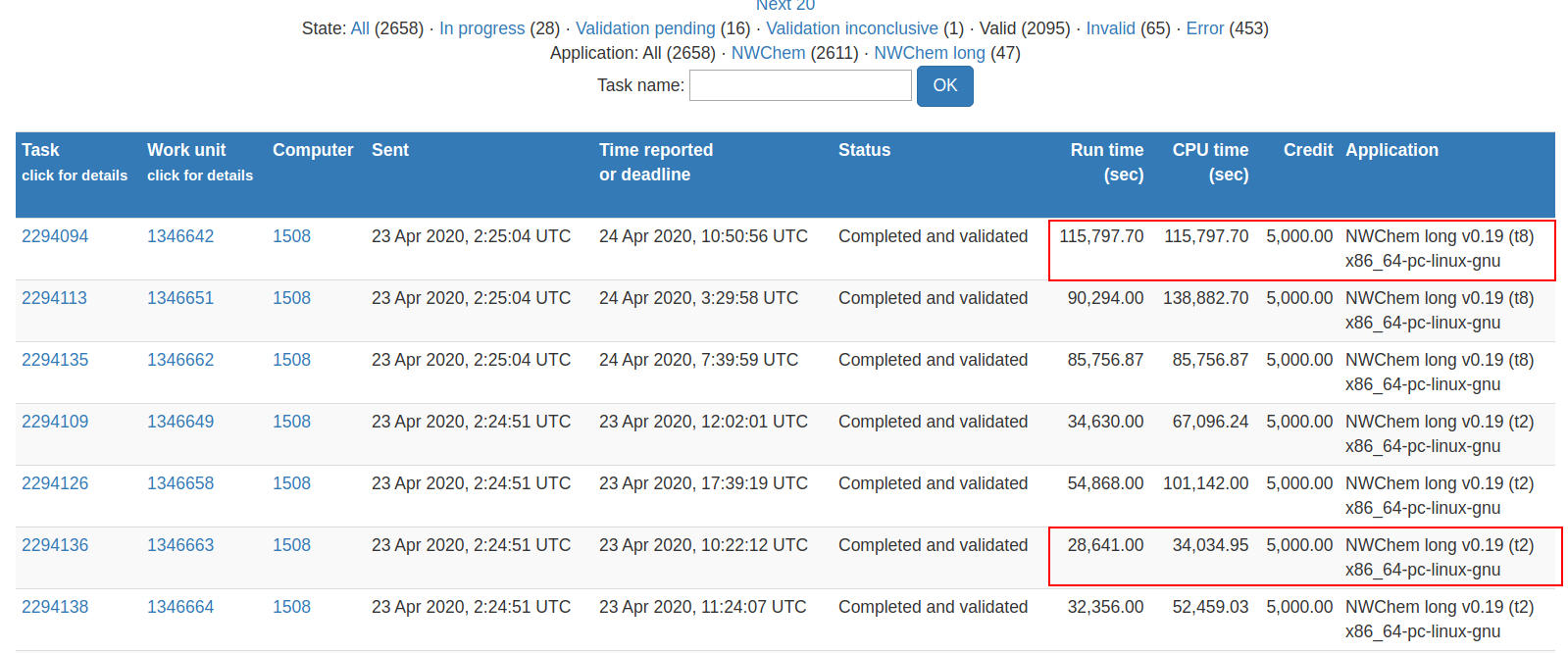

I recently returned to the project and saw the new NWChem Long tasks. I dedicated 20 threads, and after some back and forth the server started sending me mostly t8 tasks. It is really surprising that bigger tasks give you less reward: a t8 task earns the same 5,000 credits as a t4 or t2 task while occupying more threads :)

Joined: 7 Nov 19 Posts: 31 Credit: 4,245,903 RAC: 0

It does not look like your CPU used 8 threads for the task circled in red, because its run time equals its CPU time. For a multi-threaded task the run time should be much lower than the CPU time, as you can see on your t2 tasks. One possible reason is that your CPU is running so many processes/tasks that each one claims 8 threads but actually uses only 1.
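
The run-time vs. CPU-time check above can be expressed as a ratio: CPU time divided by wall-clock run time approximates how many threads a task kept busy on average. Below is a minimal Python sketch; the function names, the 1.5 cut-off and the sample durations are hypothetical illustrations, not values taken from the project.

```python
from datetime import timedelta


def effective_threads(cpu_time: timedelta, run_time: timedelta) -> float:
    """Average number of threads kept busy: CPU time / wall-clock run time."""
    if run_time <= timedelta(0):
        raise ValueError("run time must be positive")
    return cpu_time / run_time  # timedelta / timedelta gives a float


def looks_single_threaded(cpu_time: timedelta, run_time: timedelta,
                          claimed_threads: int, cutoff: float = 1.5) -> bool:
    """Flag a multi-threaded task whose measured parallelism is close to 1.

    The cutoff is an arbitrary, hypothetical illustration value.
    """
    return claimed_threads > 1 and effective_threads(cpu_time, run_time) < cutoff


# Hypothetical examples: a healthy t2 task, and a t8 task where run time == CPU time.
t2 = (timedelta(hours=19, minutes=30), timedelta(hours=10))
t8 = (timedelta(hours=20), timedelta(hours=20))
print(effective_threads(*t2))           # 1.95
print(looks_single_threaded(*t8, 8))    # True
```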

Joined: 7 Feb 20 Posts: 10 Credit: 6,625,400 RAC: 0

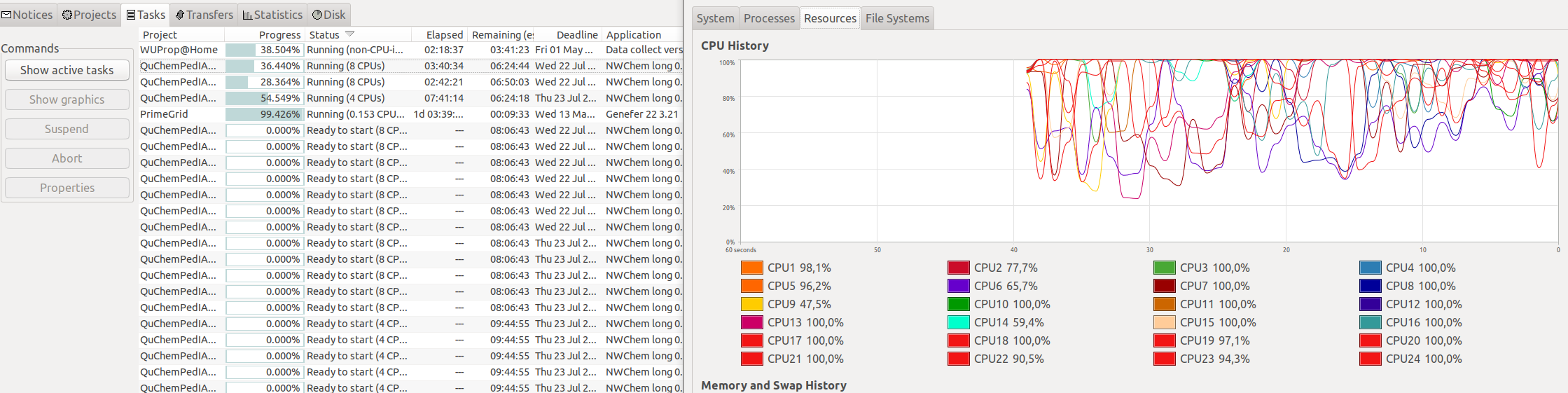

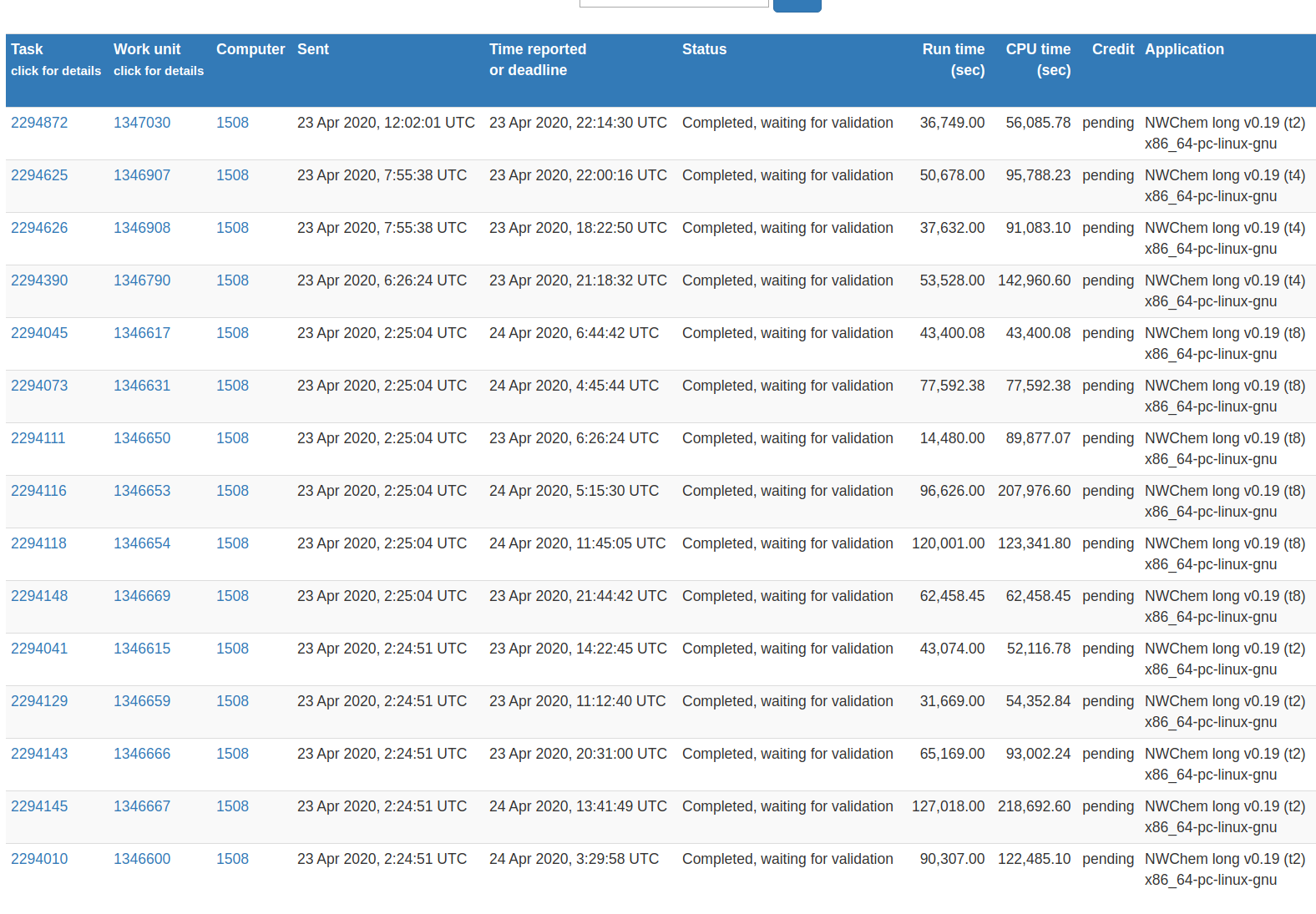

I see what you mean, but it doesn't look like tasks are sitting idle without using the CPUs: 8+8+4 threads is exactly what I expect. Some t8 tasks are pending validation, and their CPU time vs. run time doesn't look right either =(

Joined: 16 Dec 19 Posts: 25 Credit: 11,938,843 RAC: 0

Credits for long tasks are all fixed at the same value. You may be overcommitting your CPU if you are using all the threads; you need to leave some headroom just in case. I set mine to 90% of all CPUs (Homepage > Computer preferences > Computing > Usage limits). Hopefully that will give better results. Z
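
To see what such a percentage means in whole threads, here is a small Python sketch. It assumes the client rounds the percentage down to whole logical CPUs and never uses fewer than one; that rounding rule is an assumption, not something stated in this thread.

```python
import math


def usable_threads(logical_cpus: int, max_cpu_pct: float) -> int:
    """Threads BOINC may use under a 'use at most N% of the CPUs' limit.

    Assumption: the result is rounded down and is at least 1.
    """
    return max(1, math.floor(logical_cpus * max_cpu_pct / 100))


for pct in (100, 99, 90):
    used = usable_threads(20, pct)
    print(f"{pct}% of 20 threads -> {used} for BOINC, {20 - used} left free")
```

Under that assumption, 90% of 20 threads leaves 18 for BOINC and 2 free for everything else.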

Joined: 16 Nov 19 Posts: 44 Credit: 21,290,949 RAC: 0

If you set your CPU usage to 99% and can see that one or more threads are running below 50-75%, then setting it to 100% is NOT overcommitting the CPU! If, on the other hand, some GPU projects report incorrect CPU usage (e.g. they report 0.29 CPU per GPU and you have 3 GPUs, but in reality they're using 0.997 CPU per GPU), then you're overcommitting the CPU, and setting the CPU to 99% will still show 100% CPU utilization. In most scenarios an overcommitted CPU will not make a task take 3x longer to finish, since threads are constantly shuffled around.
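
The GPU example above is easy to put into numbers. In the Python sketch below, the 3 GPUs and the 0.29 and 0.997 CPU-per-GPU figures come from the post, while the 20-thread host with 19 threads running CPU tasks (roughly what a 99% limit would leave) is a hypothetical input. It compares what the GPU tasks report with what they actually consume.

```python
def gpu_cpu_gap(gpu_count: int, reported_per_gpu: float, actual_per_gpu: float) -> float:
    """CPU threads consumed by GPU tasks beyond what they report."""
    return gpu_count * (actual_per_gpu - reported_per_gpu)


def cpu_demand_ratio(logical_cpus: int, cpu_task_threads: int,
                     gpu_count: int, actual_per_gpu: float) -> float:
    """Total thread demand divided by logical CPUs; above 1.0 means overcommitted."""
    return (cpu_task_threads + gpu_count * actual_per_gpu) / logical_cpus


gap = gpu_cpu_gap(3, 0.29, 0.997)           # about 2.12 unaccounted threads
ratio = cpu_demand_ratio(20, 19, 3, 0.997)  # about 1.10, i.e. overcommitted
print(f"hidden GPU load: {gap:.2f} threads, demand/capacity: {ratio:.2f}")
```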

Joined: 7 Feb 20 Posts: 10 Credit: 6,625,400 RAC: 0

Indeed, I don't see the point of worrying about "overcommitting". I have been running at 100% CPU on different PCs for 5+ years and never saw any problems, maybe because of Linux :) Sometimes I have to turn off the GPU when I need to use virtual machines, or free up to 2 threads to watch YouTube at resolutions higher than 720p30.

Joined: 7 Nov 19 Posts: 31 Credit: 4,245,903 RAC: 0

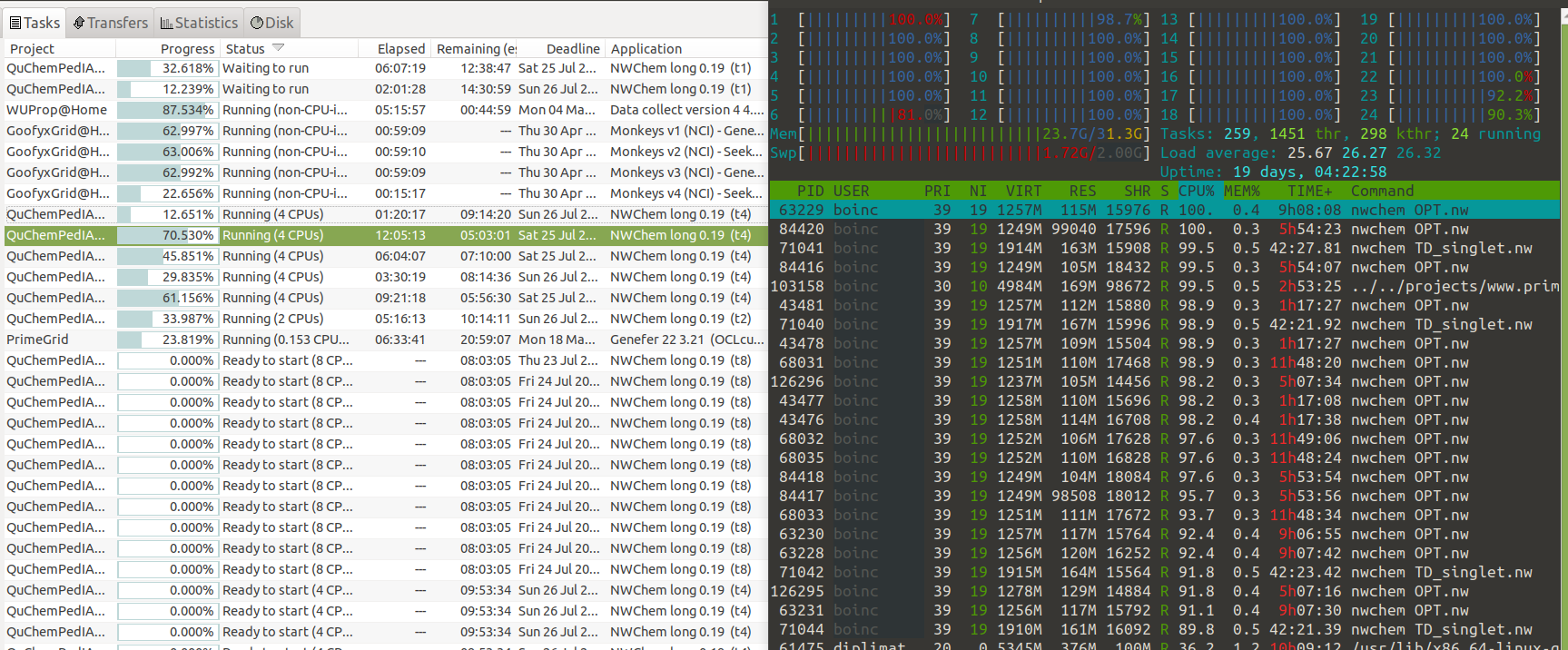

Have you tried monitoring the process list? Are there 20 (8+8+4) nwchem processes running at 100%?
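
One way to answer that question on Linux is to list processes with their %CPU and filter for NWChem. The Python sketch below parses the output of `ps -eo pcpu=,comm=`; it assumes the BOINC NWChem processes have "nwchem" in their command name, which is hypothetical and should be checked against the real process list. Note that on Linux `ps` reports %CPU averaged over each process's lifetime, so `top` or `htop` gives a better live view.

```python
import subprocess


def read_ps_output() -> str:
    """Run ps and return lines of '<%CPU> <command name>' with no header."""
    result = subprocess.run(["ps", "-eo", "pcpu=,comm="],
                            capture_output=True, text=True, check=True)
    return result.stdout


def matching_cpu_usage(ps_output: str, name_fragment: str = "nwchem") -> list[float]:
    """%CPU values of processes whose command name contains name_fragment.

    The default fragment 'nwchem' is an assumption about the process name.
    """
    usages = []
    for line in ps_output.splitlines():
        parts = line.split(None, 1)  # %CPU comes first, so names with spaces survive
        if len(parts) == 2 and name_fragment in parts[1].lower():
            usages.append(float(parts[0]))
    return usages
```

Calling `matching_cpu_usage(read_ps_output())` and printing the length and sum of the result shows how many matching processes exist and their combined %CPU. If each busy thread runs as its own process, as the question implies, 8+8+4 threads would show up as about 20 processes near 100% each.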

Joined: 7 Feb 20 Posts: 10 Credit: 6,625,400 RAC: 0

> Have you tried monitoring the process list? Are there 20 (8+8+4) nwchem processes running at 100%?

It's not the best example right now; only t4 and t2 tasks are running.

Joined: 16 Nov 19 Posts: 44 Credit: 21,290,949 RAC: 0

Your CPU isn't overcommitted. Why do you use 2 CPUs on a WU? Is this a setting you have, or are you running beta projects? I only get single-core WUs (1 CPU per WU). If you're not running the CPU at 100% yet, I'd increase the setting so that 1 more core is used. It appears Goofy and Prime aren't using a lot of CPU resources.

Joined: 7 Feb 20 Posts: 10 Credit: 6,625,400 RAC: 0

Hi ProDigit, there are no extra settings involved. I chose to run NWChem Long, and the server sends me t1-t8 tasks according to its own judgment. I'm happy with the current CPU workload.

Joined: 7 Nov 19 Posts: 31 Credit: 4,245,903 RAC: 0

Joined: 16 Nov 19 Posts: 44 Credit: 21,290,949 RAC: 0

Again, to bring the conversation back to the OP's topic: the LONG WUs should get higher credit, especially now that I see more and more units running for 3 and even 4 or 5 days! In that time I could finish a whole lot of small WUs and get a much more consistent score. I think QChemp needs to adjust the PPD scoring on these units, and perhaps should start thinking about running them on several CPU cores instead, i.e. find a way to process those WUs in parallel.

They take way too long! A 3.5-day task could easily be given to systems with 8 CPU threads; that way it should be done in about 10 hours (84 hours split across 8 threads, assuming perfect scaling), which is far more reasonable. So find a way to build those WUs for parallel computing on 4 threads or more. I think 8 threads is easy to get, as most PCs nowadays have 8 threads, and the short WUs could be left for PCs with fewer than 8 threads, running on a single thread as they do now. I'm sure you could learn from how other projects do it. Lots of projects serve 4, 6, 8, 10, 12, and even 16-core WUs, and they might do 24 soon, as that many cores will be common on PCs in the near future!
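
The "about 10 hours" estimate assumes perfect linear scaling. Amdahl's law gives a more conservative figure when part of the work stays serial. The Python sketch below compares both; the parallel fraction of 0.95 is a hypothetical value, since nothing in this thread says how well NWChem scales on these WUs.

```python
def amdahl_runtime(serial_hours: float, threads: int, parallel_fraction: float) -> float:
    """Wall-clock time under Amdahl's law: T1 * ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    return serial_hours * ((1 - parallel_fraction) + parallel_fraction / threads)


single_thread = 3.5 * 24  # the 3.5-day task from the post, in hours (84 h)
print(amdahl_runtime(single_thread, 8, 1.0))   # perfect scaling: 10.5
print(amdahl_runtime(single_thread, 8, 0.95))  # hypothetical p = 0.95: about 14.2 h
```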

Joined: 27 Apr 20 Posts: 11 Credit: 714,200 RAC: 0

The problem is that no one knows in advance how long a WU will run, be it 1, 2, 3 days or more. That is why these work units get a fixed 5,000-credit bonus. However, if a work unit runs for 3 days using 4 cores, those cores are tied up for the whole 3 days: 24 hours x 3 = 72 hours, x 4 cores = 288 core-hours. 5,000 credits is then only 17.36 credits per core-hour, a bit low for the time spent on the WU. If it ran for 2 days, this jumps to 26 credits per core-hour, which is a bit better. On average 5,000 is OK, as most work units don't run for days on end; for the ones that do, it is on the low side. Conan
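
Conan's arithmetic can be checked, and extended to the t2/t4/t8 comparison from the thread title, with a few lines of Python. The function name is illustrative; the fixed 5,000-credit award and the 3-day and 2-day, 4-core scenarios come from the post.

```python
def credits_per_core_hour(credits: float, days: float, cores: int) -> float:
    """Credits divided by core-hours (days x 24 h x cores)."""
    return credits / (days * 24 * cores)


print(round(credits_per_core_hour(5000, 3, 4), 2))  # 17.36 (288 core-hours)
print(round(credits_per_core_hour(5000, 2, 4), 2))  # 26.04 (192 core-hours)

# The same fixed award spread over different thread counts for a 1-day run:
for cores in (2, 4, 8):
    print(cores, round(credits_per_core_hour(5000, 1, cores), 2))
```

Because the award is fixed, doubling the thread count halves the credits per core-hour for the same run time.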

Joined: 27 Apr 20 Posts: 11 Credit: 714,200 RAC: 0

Well, 5,000 points would have been great, even though it's low for my long-running work unit, but alas it was not meant to be. After 3 days 14 hours, tying up 4 cores the whole time, the WU errored out with EXIT_CHILD_FAILED, whatever that means. Maybe it happened when the site went down and the trickle-up messages could not be sent? Very disappointed. (This WU) Conan

Joined: 27 Nov 19 Posts: 1 Credit: 1,755,655 RAC: 0

I am sure this post will jinx me... I have only run with max CPUs = 2. With the short tasks I had trouble with more than two threads, and I never bothered to change it when the long tasks became available. The few I've run have run long (up to a day or a little longer, and down to half that), but I haven't experienced anything like what is reported here. g Edit: Thinking back, moving to max CPUs = 2 was also when I stopped running this on Windows. YMMV.

©2025 Benoit DA MOTA - LERIA, University of Angers, France